第16章、MySQL高可用和读写分离架构

1.MHA高可用架构部署

。准备环境(必须配置好1主2从开启GTID)

。上传文件到主从库/server/tools,三个节点都必须

mha4mysql-manager-0.56-0.el6.noarch.rpm

mha4mysql-node-0.56-0.el6.noarch.rpm

。配置关键程序软连接(所有节点)

因为mha只能从/usr/bin中进行调用

ln -s /application/mysql/bin/mysqlbinlog /usr/bin/mysqlbinlog

ln -s /application/mysql/bin/mysql /usr/bin/mysql

。配置各节点互信

db01:

rm -rf /root/.ssh

ssh-keygen

cd /root/.ssh

mv id_rsa.pub authorized_keys

scp -r /root/.ssh root@192.168.58.52:/root #linux用户的密码

scp -r /root/.ssh root@192.168.58.53:/root #linux用户的密码

。各节点验证

db01:

ssh 192.168.58.51 date

ssh 192.168.58.52 date

ssh 192.168.58.53 date

db02:

ssh 192.168.58.51 date

ssh 192.168.58.52 date

ssh 192.168.58.53 date

db03:

ssh 192.168.58.51 date

ssh 192.168.58.52 date

ssh 192.168.58.53 date

。安装软件

下载mha软件

mha官网:https://code.google.com/archive/p/mysql-master-ha/

github下载地址:https://github.com/yoshinorim/mha4mysql-manager/wiki/Downloads

# 安装扩展源(已经有yum源的可忽略)

wget http://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

rpm -ivh epel-release-latest-7.noarch.rpm

# 安装依赖包

yum install perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel- ForkManager -y

# 安装node包v0.58

wget https://github.com/yoshinorim/mha4mysql- node/releases/download/v0.58/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

# 安装manager包v0.58

wget https://github.com/yoshinorim/mha4mysql- manager/releases/download/v0.58/mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

所有节点安装Node软件依赖包

cd /server/tools

yum install perl-DBD-MySQL -y

rpm -ivh mha4mysql-node-0.56-0.el6.noarch.rpm

Manager软件安装(db03)

yum install -y perl-Config-Tiny epel-release perl-Log-Dispatch perl-Parallel-ForkManager perl-Time-HiRes

rpm -ivh mha4mysql-manager-0.56-0.el6.noarch.rpm

。在db01主库中创建mha需要的用户(由于是主从复制,其他主机上也都会安装)

create user mha@‘192.168.58.%’ identified by ‘123456’;

grant all privileges on *.* to mha@'192.168.58.%';

。配置文件准备(db03)

创建配置文件目录

mkdir -p /etc/mha

创建日志目录

mkdir -p /var/log/mha/app1

编辑mha配置文件

cat > /etc/mha/app1.cnf<<EOF

[server default]

manager_log=/var/log/mha/app1/manager

manager_workdir=/var/log/mha/app1

master_binlog_dir=/application/mysql/data/logs

user=mha

password=123456

ping_interval=2

repl_password=123456

repl_user=repl

ssh_user=root

[server1]

hostname=192.168.58.51

port=3306

[server2]

hostname=192.168.58.52

port=3306

[server3]

hostname=192.168.58.53

port=3306

EOF

。状态检查(db03)

masterha_check_ssh –conf=/etc/mha/app1.cnf #互信检查

All SSH connection tests passed successfully.正常,互信成功

masterha_check_repl –conf=/etc/mha/app1.cnf #主从复制检查

MySQL Replication Health is OK. 主从复制关系正常

。开启MHA(db03):

nohup masterha_manager –conf=/etc/mha/app1.cnf –remove_dead_master_conf –ignore_last_failover < /dev/null> /var/log/mha/app1/manager.log 2>&1 &

。查看MHA状态(db03)

masterha_check_status –conf=/etc/mha/app1.cnf

app1 (pid:1912) is running(0:PING_OK), master:192.168.58.51 (mha高可用架构搭建成功)

2.主库宕机故障模拟及处理

。停主库db01:

观察manager 日志 tail -f /var/log/mha/app1/manager

末尾必须显示successfully,才算正常切换成功。

。修复故障库:

启动故障数据库

mysqld –defaults-file=/data/mysql/mysql3306/my3306.cnf &

恢复主从结构

db01:

CHANGE MASTER TO

MASTER_HOST=‘192.168.58.52’,

MASTER_PORT=3306,

MASTER_USER=‘repl’,

MASTER_PASSWORD=‘123456’,

MASTER_AUTO_POSITION=1;

start slave ;

。修改配置文件 (db03)

vim /etc/mha/app1.cnf

[server1]

hostname=192.168.58.51

port=3306

检查

masterha_check_ssh –conf=/etc/mha/app1.cnf #互信检查

All SSH connection tests passed successfully.正常,互信成功

masterha_check_repl –conf=/etc/mha/app1.cnf #主从复制检查

MySQL Replication Health is OK. 主从复制关系正常

。启动MHA(db03)

nohup masterha_manager –conf=/etc/mha/app1.cnf –remove_dead_master_conf –ignore_last_failover < /dev/null> /var/log/mha/app1/manager.log 2>&1 &

。查看MHA状态(db03)

masterha_check_status –conf=/etc/mha/app1.cnf

app1 (pid:19347) is running(0:PING_OK), master:192.168.58.52

关闭:masterha_stop –conf=/etc/mha/app1.cnf

3.MHA VIP自动切换

db03操作:

vim /usr/local/bin/master_ip_failover

内容:

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '192.168.58.59/24';

my $key = '1';

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

GetOptions(

'command=s' => $command,

'ssh_user=s' => $ssh_user,

'orig_master_host=s' => $orig_master_host,

'orig_master_ip=s' => $orig_master_ip,

'orig_master_port=i' => $orig_master_port,

'new_master_host=s' => $new_master_host,

'new_master_ip=s' => $new_master_ip,

'new_master_port=i' => $new_master_port,

);

exit &main();

sub main {

print "

IN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===

";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host

";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@

";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host

";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK

";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user@$new_master_host " $ssh_start_vip "`;

}

sub stop_vip() {

return 0 unless ($ssh_user);

`ssh $ssh_user@$orig_master_host " $ssh_stop_vip "`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port

";

}

dos2unix /usr/local/bin/master_ip_failover 让文件适合linux系统

chmod +x /usr/local/bin/master_ip_failover

更改manager配置文件:

vim /etc/mha/app1.cnf

添加:在【serverdefault中】

master_ip_failover_script=/usr/local/bin/master_ip_failover

在主库上,手工生成第一个vip地址

手工在主库上绑定vip,注意一定要和配置文件中的ethN一致,我的是eth0:1(1是key指定的值)

ifconfig eth0:1 192.168.58.59/24

如果指定错误 用此命令ifconfig eth0:1 down

重启mha 【db03】

masterha_stop –conf=/etc/mha/app1.cnf 先停

nohup masterha_manager –conf=/etc/mha/app1.cnf –remove_dead_master_conf –ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

[root@db03 ~]# masterha_check_status –conf=/etc/mha/app1.cnf

app1 (pid:19347) is running(0:PING_OK), master:192.168.58.52

4.MHA主从数据自动补足

Binlog Server(db03)

选择一个专门保存主库 binlog的服务器,必须要有mysqlbinlog命令,我们选择db03

创建目录用于存放二进制目录(db03)

mkdir -p /data/mha/binlog

chown -R mysql.mysql /data/*

cd /data/mha/binlog

mysqlbinlog -R –host=192.168.58.51 –user=mha –password=123456 –raw –stop-never mysql-bin.000001 &

vim /etc/mha/app1.cnf

[binlog1]

no_master=1

hostname=192.168.58.53

master_binlog_dir=/data/mha/binlog

注意:

拉取日志的起点,需要按照目前从库的已经获取到的二进制日志点为起点

重启MHA

masterha_stop –conf=/etc/mha/app1.cnf

nohup masterha_manager –conf=/etc/mha/app1.cnf –remove_dead_master_conf –ignore_last_failover < /dev/null > /var/log/mha/app1/manager.log 2>&1 &

masterha_check_status –conf=/etc/mha/app1.cnf

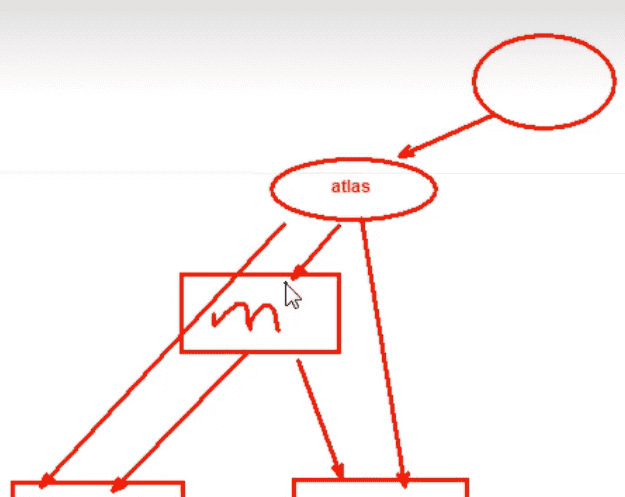

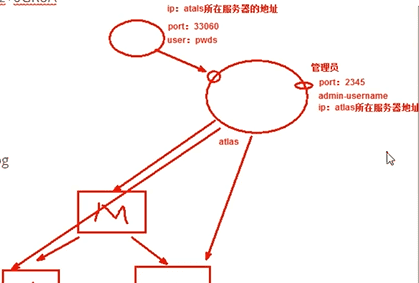

5.Atlas读写分离高性能架构

①Atlas部署读写分离架构(在db03配置)

rz 上传所需rpm文件

rpm -ivh Atlas-2.2.1.el6.x86_64.rpm

cd /usr/local/mysql-proxy/conf

mv test.cnf test.cnf.bak

cat > test.cnf <<EOF

[mysql-proxy]

admin-username = user

admin-password = pwd

proxy-backend-addresses = 192.168.58.59:3306

proxy-read-only-backend-addresses = 192.168.58.51:3306,192.168.58.53:3306

pwds = repl:/iZxz+0GRoA=,mha:/iZxz+0GRoA=

daemon = true

keepalive = true

event-threads = 8

log-level = message

log-path = /usr/local/mysql-proxy/log

sql-log=ON

proxy-address = 0.0.0.0:33060

admin-address = 0.0.0.0:2345

charset=utf8

EOF

*注解:

/usr/local/mysql-proxy/bin/encrypt 123456 密码加密

*atlas的管理用户(管理员使用的用户)

admin-username = user

admin-password = pwd

*写节点(也就是主库提供写操作)

proxy-backend-addresses = 192.168.58.55:3306

*读节点

proxy-read-only-backend-addresses = 192.168.58.51:3306,192.168.58.53:3306

*应用用户和管理用户

pwds = repl:3yb5jEku5h4=,mha:O2jBXONX098=

/usr/local/mysql-proxy/bin/encrypt 123456 —->制作加密密码

*其他

daemon = true 后台运行

keepalive = true 心跳检测

event-threads = 8 开启线程

log-level = message 日志级别

log-path = /usr/local/mysql-proxy/log 日志目录

sql-log=ON 记录sql语句

proxy-address = 0.0.0.0:33060 代理地址(对外提供服务)

admin-address = 0.0.0.0:2345 管理员使用的端口

charset=utf8 字符集

启动atlas

/usr/local/mysql-proxy/bin/mysql-proxyd test start

stop 关闭

ps -ef |grep proxy

6.读写分离架构应用

①Atlas功能测试

测试读操作:

登录atlas所在的数据库。客户端也需要这样配置数据库信息

mysql -umha -p123456 -h 192.168.58.53 -P 33060 必须经过atlas才能进行读写分离

测试读写是否分离:

db03 [(none)]>select @@server_id;

测试写操作:

mysql> begin;select @@server_id;commit;

②创建应用用户 zhangsan( select update insert) 密码123456

1. 在主库中,创建用户

grant select ,update,insert on . to zhangsan@‘192.168.58.%’ identified by ‘123456’;

2. 在atlas中添加生产用户

/usr/local/mysql-proxy/bin/encrypt 123456 —->制作加密密码

3. 改配置文件

vim test.cnf

pwds = repl:3yb5jEku5h4=,mha:O2jBXONX098=,zhangsan:/iZxz+0GRoA=

/usr/local/mysql-proxy/bin/mysql-proxyd test restart

[root@db03 conf]# mysql -uzhangsan -p123456 -h 192.168.58.53 -P 33060

7.Atlas在线管理

连接管理接口

远程 mysql -uuser -ppwd -h 192.168.58.53 -P2345

本地 mysql -uuser -ppwd -h127.0.0.1 -P2345

打印帮助:

mysql> select * from help;

查询后端所有节点信息:

mysql> SELECT * FROM backends;

临时关闭节点和启动

SET OFFLINE $backend_id

SET ONLINE $backend_id

动态添加删除节点

REMOVE BACKEND 3;

动态添加从库节点

ADD SLAVE 192.168.58.53:3306;

保存配置到配置文件

SAVE CONFIG;

8.读写分离避坑指南

主从延迟,主库是最新数据,从库是旧数据;

方案一:将这类查询,强制走主库进行读取数据,对于可以返回旧数据的查询走从库;

方案二:先判断主从是不是有延迟;

Seconds_Behind_Master: 0

Retrieved_Gtid_Set对比Executed_Gtid_Set

相关文章